SciLake at the EOSC-CoARA workshop for Advancing Research Assessment


On April 10th, 2024, Thanasis Vergoulis from Athena RC, project coordinator of SciLake, represented our project at the EOSC-CoARA workshop for advancing research assessment, presenting how SciLake is contributing to open infrastructures for research assessment. This blog post summarizes the key takeaways from the event.

CoARA and EOSC Commitments

The workshop hosted discussions on advancing the European Research Area's Policy Agenda for 2022-2024, focusing on promoting open sharing and reuse of research outputs and on improving research assessment systems (i.e., Actions 1 and 2). To this end, it aimed to foster collaboration and strategic alignment between two pivotal initiatives: the Coalition for Advancing Research Assessment (CoARA) and the European Open Science Cloud (EOSC). CoARA aims to reform research assessment practices and policies so that they recognize a broader range of scientific contributions while promoting transparency and inclusivity. EOSC, on the other hand, provides the infrastructure needed for easy access to research data across Europe. Together, they ensure that the new assessment criteria encouraged by CoARA align with the open and interoperable data environment EOSC provides. This alignment fosters a research landscape that is more collaborative, transparent, inclusive, and effective in advancing open science principles across Europe.

In this context, SciLake, PathOS, OPUS, and GraspOS were invited to inform stakeholders about their goals and status. These projects share the mission of aligning with the overarching vision of the European Commission, EOSC, and CoARA for reforming research assessment. The discussion contributed to the publication of the European Commission's Action Plan To Implement The Ten Commitments On The Agreement on Reforming the Research Assessment.

SciLake's Mission

As an INFRAEOSC project, SciLake is expected to have a substantial and long-term impact on the EOSC ecosystem. During the workshop, Thanasis Vergoulis presented the main objectives of our project, highlighting its three key contributions to the research assessment field:

- Enhancing the interoperability and accessibility of Scientific Knowledge Graphs (SKGs)

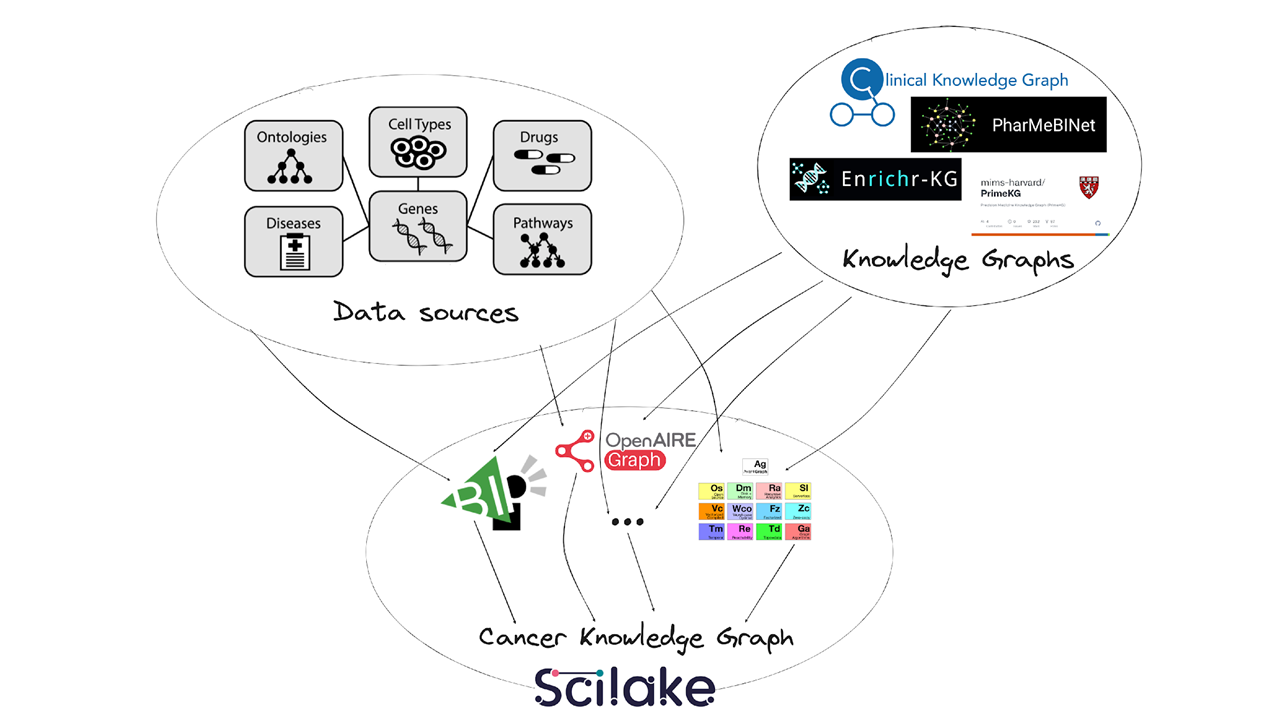

Scientific Knowledge Graphs (SKGs) form the fundamental input for research assessment practices. Because enhancing their interoperability and accessibility is a key focus of SciLake, the project is expected to significantly facilitate these practices. On interoperability, SciLake researchers collaborate closely with the members of the Research Data Alliance's (RDA) SKG-Interoperability Framework (SKG-IF) group to refine a common data exchange model for domain-agnostic SKGs. SciLake also leads the effort to design extensions to the basic SKG-IF model that support domain-specific entities and relationship types in the fields of the project pilots. On accessibility, SciLake’s APIs offer advanced graph querying capabilities through the AvantGraph analytics engine. This makes SKG querying simpler and more efficient, helping to reveal research insights and further supporting effective research assessment.

- Developing modules that enrich research data in ways that are valuable for research assessment use cases

Some of the modules SciLake is developing are specifically designed to add value to research data, making them highly beneficial for implementing research assessment practices. Indicative examples include a module that classifies research works by relevant Fields of Science, and modules that calculate a range of indicators offering insight into various aspects of citation-based scientific impact or the reproducibility of research works.

- Recognising and incorporating discipline-specific contributions

SciLake is closely collaborating with domain experts conducting four pilots focused on analyzing research in distinct scientific fields: Neuroscience, Cancer research, Transportation research, and Energy research. This work is invaluable for identifying domain-specific research activities and contributions, enabling them to be effectively tracked and recognized within research assessment practices.

Conclusions

In conclusion, SciLake is contributing to research assessment through its innovations in the field of Scientific Knowledge Graphs: enhancing their interoperability, generating deeper research insights, and incorporating discipline-specific contributions. Through these efforts, SciLake is laying the groundwork for a more cohesive and responsible approach to research assessment.

The slides of the presentation can be found on Zenodo: https://zenodo.org/records/10959719
